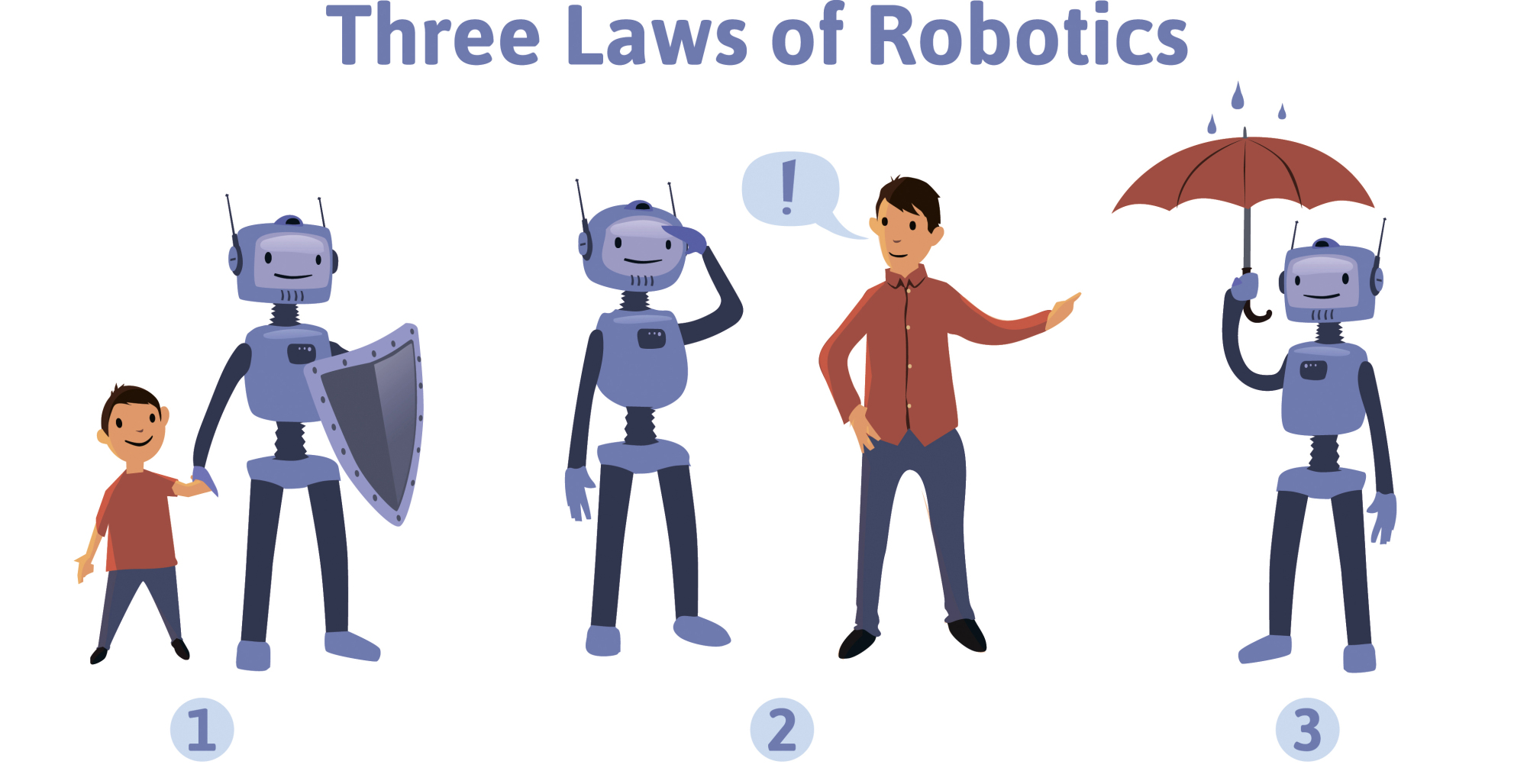

I just stayed at a hotel that had a robot trundling around the lobby. Its principal function seemed to be conveying the message, "This is a trendy hotel," but still, it is a sign of the times, one that nicely intersects with my own recent foray into the field of robot law.

I am not trendy, though, just lazy: Law is a much easier subject to write about when there is no actual law. Then you can just focus on the heady topic of what the law should be at some time in the future.
