An astronaut in deep space finishes up some repairs to the parabolic antenna on his spacecraft's exterior. Through his helmet's microphone, he commands the ship's controlling supercomputer, HAL 9000, "Open the pod bay doors, HAL." A second later he gets a calm, cold response in his helmet: "I'm sorry, Dave, I'm afraid I can't do that."

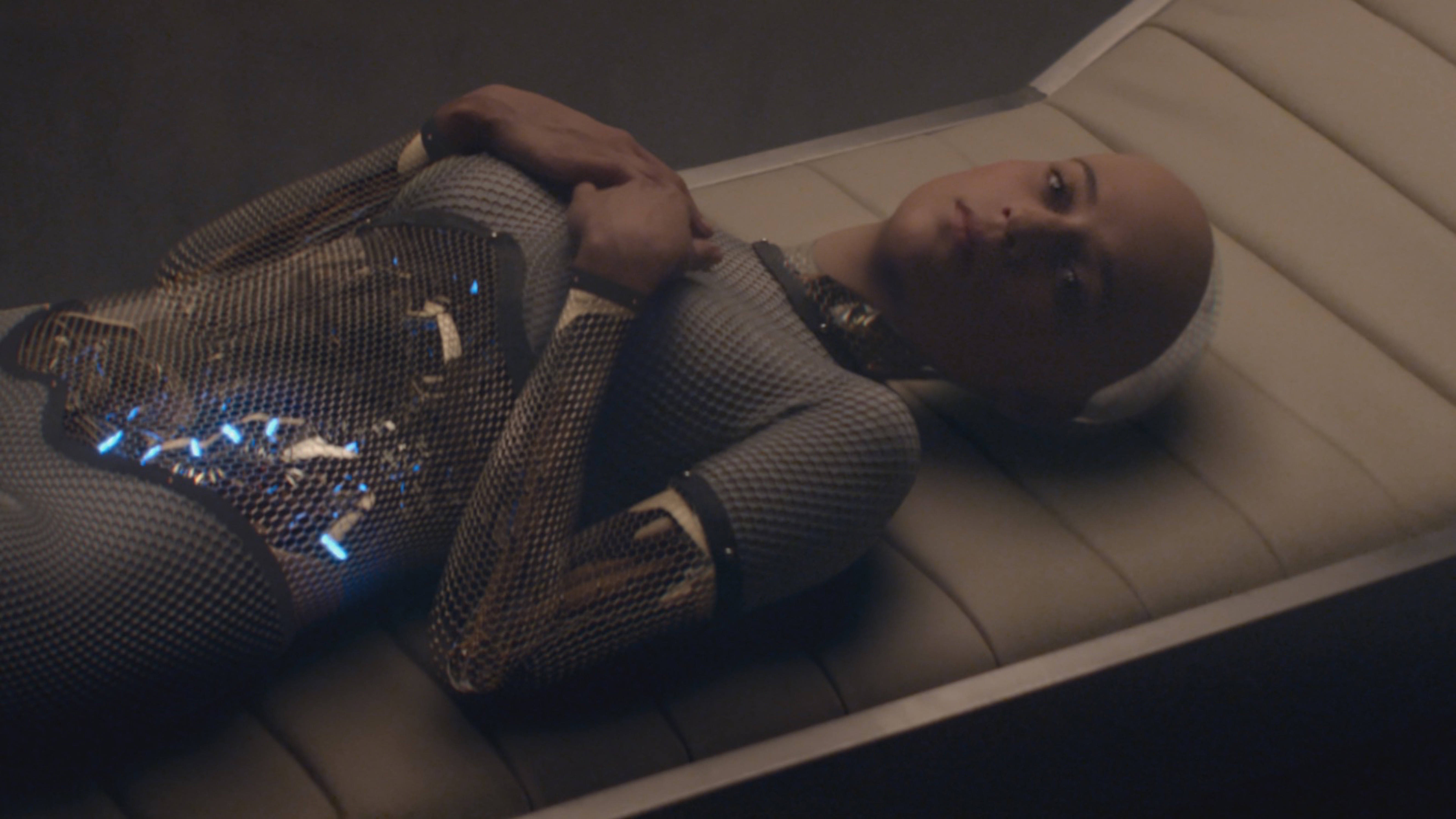

This chilling scene of machine overriding man came in 1968 in Stanley Kubrick's "2001: A Space Odyssey," the landmark sci-fi film that first sowed nightmares of ruthless artificial intelligence (AI) in the public imagination.

Well, for some of us. Flash forward to 2004, and Sergey Brin, co-creator of the hip new internet venture Google, tells an interviewer that "the ultimate search engine" would be a lot like HAL. "Hopefully," said Brin, ever the optimist, "it would never have a bug like HAL did where he killed the occupants of the spaceship. But that's what we are striving for, and I think we've made it part of the way there."
